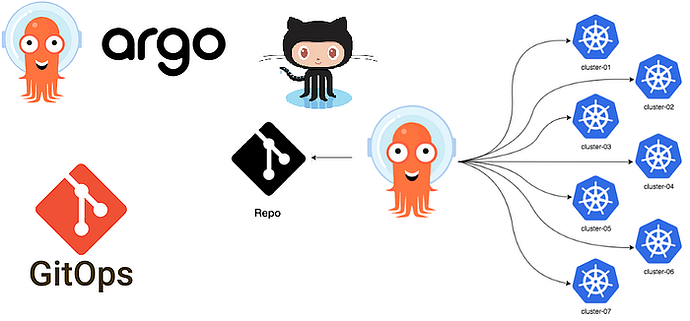

In GitOps, how you organize Kubernetes resources and application deployments largely determines how smooth the day-to-day workflow is. Teams adopting GitOps tools like ArgoCD often face questions about how to structure repositories and folders so that environments stay manageable, changes stay clear, and risk stays low. This post covers best practices for structuring GitOps repositories, with a focus on ArgoCD and Kubernetes applications.

Why GitOps Structure Matters

GitOps is a methodology where the source of truth for infrastructure and deployments is maintained in Git repositories. The repository not only serves as the central place for defining and managing configurations, but also helps in maintaining the integrity of deployments across different environments (e.g., dev, staging, production).

A well-structured GitOps repository:

- Supports Team Collaboration: It allows multiple teams (such as developers, platform engineers, and operations) to contribute, make updates, and see the infrastructure changes clearly.

- Reduces Deployment Risks: By clearly defining environments and separating infrastructure from application code, a good structure minimizes the chance of accidental changes that might impact critical environments.

- Facilitates Automated Processes: It is the foundation of automation. ArgoCD relies on the structure of the repository to identify the right application configurations for deployment.

General Approaches to Structuring GitOps Repositories

There are several strategies to structure your GitOps repositories, and the right one often depends on the size of the team, the environments you’re managing, and the complexity of the applications being deployed. Below are the most common approaches.

1. Separate Repositories for Infrastructure and Application Code

A common practice is to have separate repositories for the following:

- Infrastructure as Code (IaC): Terraform or Kubernetes manifests that deal with creating underlying resources such as VPCs, databases, Kubernetes clusters, etc.

- Application Code and Configurations: Code that defines the applications, along with their Kubernetes manifests, such as deployment specs, services, etc.

The main advantage of separating infrastructure from application code is lifecycle decoupling. Infrastructure elements (e.g., networking, security) change less frequently compared to applications, which may be updated regularly. Having separate repositories helps to:

- Simplify Versioning: It allows infrastructure updates and application deployments to be versioned independently.

- Reduce Complexity for Developers: Developers can focus on application code without worrying about underlying infrastructure, and platform engineers can maintain infrastructure without disrupting application deployment.

2. Organizing Environment-Specific Folders

For GitOps to manage multiple environments (e.g., dev, staging, production), it is critical to keep configurations for different environments separated but consistent.

The typical folder structure looks like this:

├── base/
│   ├── kustomization.yaml
│   ├── deployment.yaml
│   ├── service.yaml
│   └── config.yaml
└── environments/
    ├── dev/
    │   ├── kustomization.yaml
    │   └── patches.yaml
    ├── staging/
    │   ├── kustomization.yaml
    │   └── patches.yaml
    └── production/
        ├── kustomization.yaml
        └── patches.yaml

Base Directory: This contains the shared components that are consistent across all environments. This might include core manifests like deployments, services, and default configuration maps.

Environments Directory: This houses overlays for each environment, such as development (dev), staging (staging), and production (production). These environment-specific folders often use tools like Kustomize to apply specific patches to the base configuration, allowing for minor variations between environments without duplicating the entire manifest.
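As a minimal sketch of how the two layers connect, the fragment below shows what the base and dev `kustomization.yaml` files could contain for the tree above. The file names come from the tree; everything else is an assumption for illustration. The two documents are separate files, shown together only for brevity.

```yaml
# base/kustomization.yaml
# Lists the shared manifests that every environment builds on.
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  - deployment.yaml
  - service.yaml
  - config.yaml
---
# environments/dev/kustomization.yaml
# Pulls in the base and layers the dev-specific patch file on top.
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  - ../../base
patches:
  - path: patches.yaml
```

Running `kubectl kustomize environments/dev` renders the merged result locally, which is a convenient way to review what an overlay changes before committing it.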

3. Using Multiple Repositories

When multiple teams and services are involved, using separate repositories for different aspects of deployment can enhance scalability and modularity. A typical breakdown includes:

- Cluster Infrastructure Repo: This repository contains Terraform configurations or other infrastructure code responsible for provisioning clusters.

- Shared Services Repo: This repo manages common services deployed in Kubernetes clusters, such as observability tools (Prometheus, Grafana), service meshes, ingress controllers, etc.

- Application Repos: Each application has its own repository, containing the application code and its Kubernetes manifests.

- GitOps Configuration Repo: This repository is dedicated to maintaining the configuration that ArgoCD or Flux uses to deploy resources to Kubernetes. It includes references to other repositories, enabling continuous deployment.

Managing ArgoCD Applications Across Repositories

ArgoCD, as a GitOps operator, uses Git repositories as the source of truth for managing Kubernetes applications. It supports various formats, including Helm charts, Kustomize, and plain Kubernetes manifests. Below are best practices for managing ArgoCD applications across repositories.

1. Keep GitOps Configuration in a Dedicated Repository

A dedicated GitOps repository helps in maintaining a clear boundary between the application and its deployment configuration. For instance, while an application repository may contain the source code and Dockerfiles, the GitOps repository will have the ArgoCD application configurations. This clear separation:

- Simplifies Management: Developers can focus on code changes without affecting deployment, while platform teams can ensure deployments are configured as per best practices.

- Improves Access Control: Sensitive deployment information can be controlled separately, reducing access to critical infrastructure.
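To illustrate what lives in such a repository, here is a hedged sketch of an ArgoCD `Application` manifest that points at an environment folder in another repository. The repository URL, application name, and namespaces are hypothetical placeholders, not values from this post.

```yaml
# Stored in the GitOps configuration repo (hypothetical path: apps/app1-dev.yaml)
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: app1-dev                 # hypothetical application name
  namespace: argocd              # namespace where ArgoCD is installed (assumed)
spec:
  project: default
  source:
    repoURL: https://git.example.com/org/app1.git   # hypothetical repo URL
    targetRevision: main
    path: environments/dev       # Kustomize overlay for the dev environment
  destination:
    server: https://kubernetes.default.svc          # the cluster ArgoCD runs in
    namespace: app1-dev          # hypothetical target namespace
  syncPolicy:
    automated:                   # auto-sync is reasonable for non-production
      prune: true
      selfHeal: true
```

Because the manifest only references the application repository, access to this file can be restricted to the platform team without limiting who can commit application code.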

2. Use the App of Apps Pattern

The “App of Apps” pattern is a strategy commonly used in ArgoCD to manage multiple applications from a single configuration point. In this pattern, a parent ArgoCD application contains references to child applications, enabling better scalability.

The repository structure might look like this:

├── apps/
│   ├── app1.yaml
│   ├── app2.yaml
│   ├── shared-services.yaml
│   └── app-of-apps.yaml

App-of-Apps: The parent app-of-apps.yaml file defines an ArgoCD application whose source is the set of child application manifests. Each child application YAML in turn references a different service, such as a database, an API, or a frontend. This makes managing multiple applications much simpler: adding a service means adding one file, and each group of applications can be versioned independently.
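A minimal sketch of the parent application might look like the following. It assumes the child manifests live in the `apps/` folder shown above; the repository URL is a hypothetical placeholder.

```yaml
# apps/app-of-apps.yaml (parent application)
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: app-of-apps
  namespace: argocd              # namespace where ArgoCD is installed (assumed)
spec:
  project: default
  source:
    repoURL: https://git.example.com/org/gitops-config.git   # hypothetical repo URL
    targetRevision: main
    path: apps                   # directory containing the child Application manifests
  destination:
    server: https://kubernetes.default.svc
    namespace: argocd            # child Application objects are created here
  syncPolicy:
    automated:
      prune: true                # removing a child file removes that child application
```

With this layout the parent only needs to be applied to the cluster once; after that, ArgoCD picks up new or removed child files from Git.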

How to Gate Infrastructure-as-Code Changes Across Environments

Managing changes across different environments is crucial for preventing unintentional impacts on production environments. Here are some effective ways to achieve this:

1. Environment Promotion Through Pull Requests

To ensure changes are carefully reviewed before being promoted across environments, use Git branches and pull requests:

- Changes to infrastructure or application configurations are initially made to the dev branch or folder.

- After testing and validation, changes can be merged into the staging branch, followed by production.

- Pull requests help ensure that all modifications are reviewed, reducing the likelihood of unintended changes.

2. Utilize GitOps Tools for Automated Promotion

ArgoCD can sync an environment automatically whenever its Git source changes, and additional tooling can automate promotion between environments. However, to maintain a controlled workflow, automatic deployments should generally be restricted to non-production environments. In production, deployments can be gated through:

- Manual Approvals: Set up manual approval workflows in CI/CD tools like Jenkins, GitHub Actions, or GitLab CI before merging to production.

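- Sync Windows and Manual Sync: ArgoCD's sync windows can block syncs to production outside approved time periods, and leaving automated sync disabled on production applications means each rollout needs an explicit sync. Sync waves, by contrast, only order resources within a single sync; they do not gate promotion between environments.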
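One way to express such a gate in ArgoCD is a sync window on the project that owns the production applications. The sketch below is an assumption-laden example: the project name, repository URL, namespace, and schedule are all hypothetical.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: AppProject
metadata:
  name: production               # hypothetical project for production apps
  namespace: argocd
spec:
  sourceRepos:
    - https://git.example.com/org/gitops-config.git   # hypothetical repo URL
  destinations:
    - server: https://kubernetes.default.svc
      namespace: app1-production                      # hypothetical namespace
  syncWindows:
    - kind: deny                 # block syncs while this window is active
      schedule: '0 22 * * *'     # cron expression: window opens at 22:00 daily
      duration: 8h               # window stays active for eight hours
      applications:
        - '*'                    # applies to every application in the project
      manualSync: true           # still allow an operator to sync by hand
```

Production applications in this project would also omit `syncPolicy.automated`, so that a merge to the production branch or folder does not deploy until someone triggers the sync.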

Dealing with Environment-Specific Values

A frequent challenge is managing configurations that differ between environments, such as DNS names or database credentials. To tackle these challenges effectively:

1. Kustomize Overlays

Kustomize is a good solution for managing differences between environments. The base manifests remain the same across environments, while overlays contain patches that introduce environment-specific changes. For example, a different volume mount or replica count could be specified in the overlay specific to each environment.
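For instance, a production overlay could raise the replica count with a small strategic merge patch like the one sketched here. The Deployment name `app1` and the replica count are hypothetical; the name has to match the Deployment defined in the base.

```yaml
# environments/production/patches.yaml
# Only the fields that differ from the base are listed; Kustomize merges
# them into the base Deployment with the same kind and name.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: app1                     # hypothetical; must match the base Deployment
spec:
  replicas: 5                    # hypothetical production replica count
```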

2. Centralized Parameter Store

Instead of hard-coding sensitive information into configuration files, use a centralized parameter store, such as AWS SSM Parameter Store or HashiCorp Vault, to store environment-specific values. Kustomize itself does not fetch values from these stores; tools such as the External Secrets Operator or argocd-vault-plugin can pull them at deployment time, reducing duplication and enhancing security.
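As one possible sketch, the manifest below uses the External Secrets Operator, a separate project that must be installed in the cluster, to copy a value from a parameter store into a Kubernetes Secret. The store name, secret names, and parameter path are hypothetical, and the `apiVersion` depends on the operator version you run.

```yaml
apiVersion: external-secrets.io/v1beta1   # may differ with your operator version
kind: ExternalSecret
metadata:
  name: app1-db-credentials      # hypothetical name
spec:
  refreshInterval: 1h            # how often to re-read the external value
  secretStoreRef:
    name: aws-parameter-store    # hypothetical ClusterSecretStore defined elsewhere
    kind: ClusterSecretStore
  target:
    name: app1-db-credentials    # Kubernetes Secret the operator creates
  data:
    - secretKey: DB_PASSWORD     # key inside the generated Secret
      remoteRef:
        key: /app1/dev/db-password   # hypothetical parameter path
```

Only the reference to the secret is committed to Git; the value itself never leaves the parameter store, and each environment overlay can point at a different parameter path.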

3. Use Parameter Files

Instead of duplicating configuration files across environments, use parameter files (e.g., dev.yaml, prod.yaml). These files define only what differs between environments, such as environment variables or resource limits, and can be referenced by ArgoCD, for example as Helm value files listed in an application definition, to apply the correct configuration during deployment.
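If the application is packaged as a Helm chart, one way to wire this up is the `helm.valueFiles` list on the ArgoCD `Application`. The sketch assumes a chart at the hypothetical path `charts/app1` with `values.yaml` and `dev.yaml` next to it; the repository URL and names are also placeholders.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: app1-dev                 # hypothetical application name
  namespace: argocd
spec:
  project: default
  source:
    repoURL: https://git.example.com/org/app1.git   # hypothetical repo URL
    targetRevision: main
    path: charts/app1            # hypothetical chart location
    helm:
      valueFiles:
        - values.yaml            # shared defaults
        - dev.yaml               # dev-only overrides; later files take precedence
  destination:
    server: https://kubernetes.default.svc
    namespace: app1-dev          # hypothetical target namespace
```

A production application would be identical apart from its name, namespace, and `prod.yaml` in place of `dev.yaml`, which keeps the difference between environments easy to review.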

Handling Changes Gradually

To prevent unintentional impacts, a key best practice is to gradually roll out changes:

- Make Changes First to Dev Environment: Changes are initially made in the dev folder or repository.

- Promote After Testing: Once validated, changes are promoted to staging and eventually production. To streamline this process, use automation tools that allow controlled promotion and provide a clear audit trail for changes.

- Monitor and Revert: Always monitor deployments and establish mechanisms for reverting to the previous state if necessary.

Conclusion

Structuring repositories for GitOps with tools like ArgoCD may seem overwhelming at first, but taking the time to define a clear and consistent structure can lead to greater scalability, better security, and improved collaboration across teams. The key is to separate what is logically different — such as infrastructure from applications, and environments from one another — while also automating as much of the process as possible to reduce manual intervention and risk.

The strategy that works best for you will depend on your organization’s specific needs, including how your teams are structured, the level of Kubernetes expertise, and the scale of your deployment. By adhering